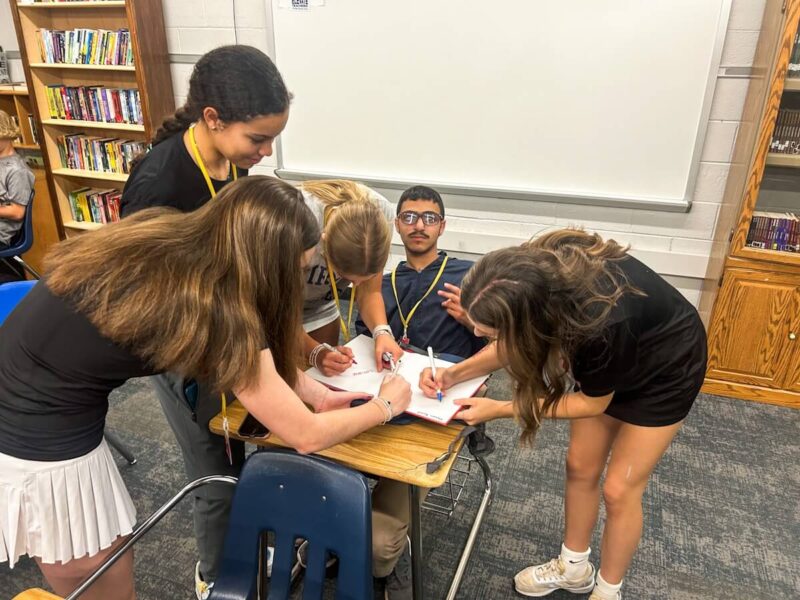

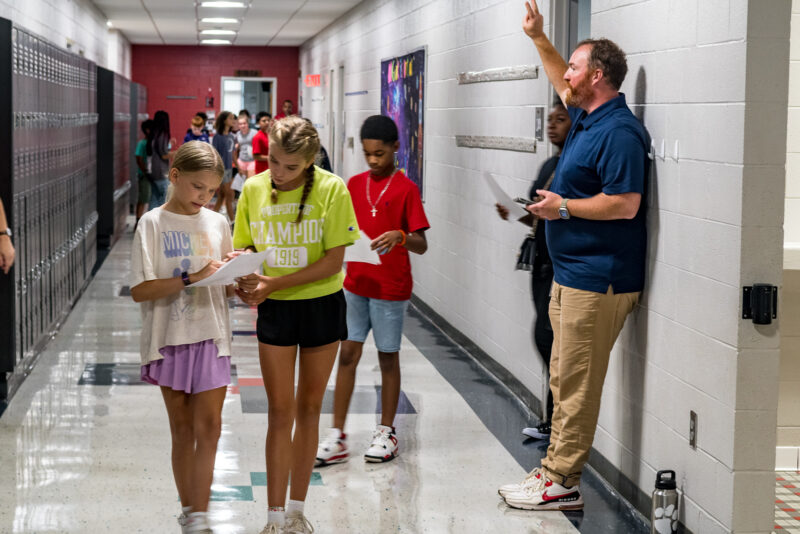

These three kids are among my best workers. Z, the boy in the middle, wasn’t the best worker last year.

This year, he is. When I told the seventh-grade administrator about the change, she threw her arms up and proclaimed, “Hallelujah!”

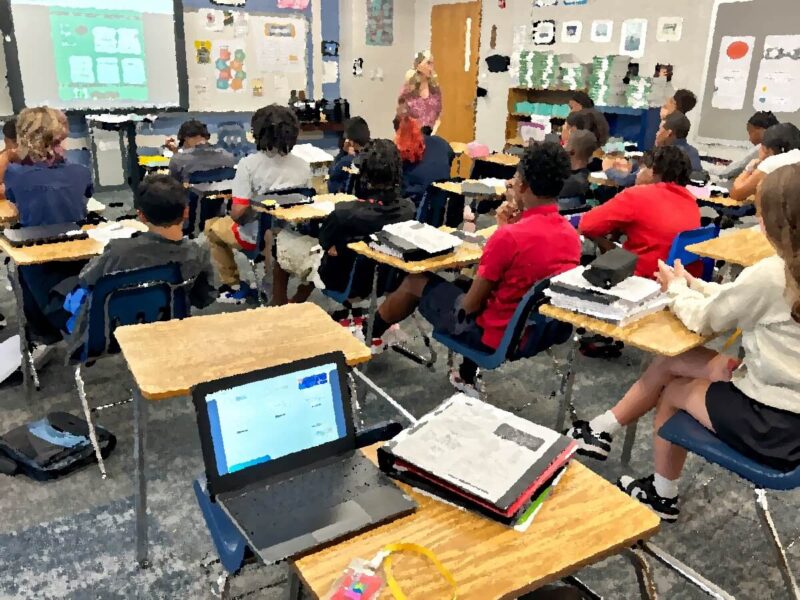

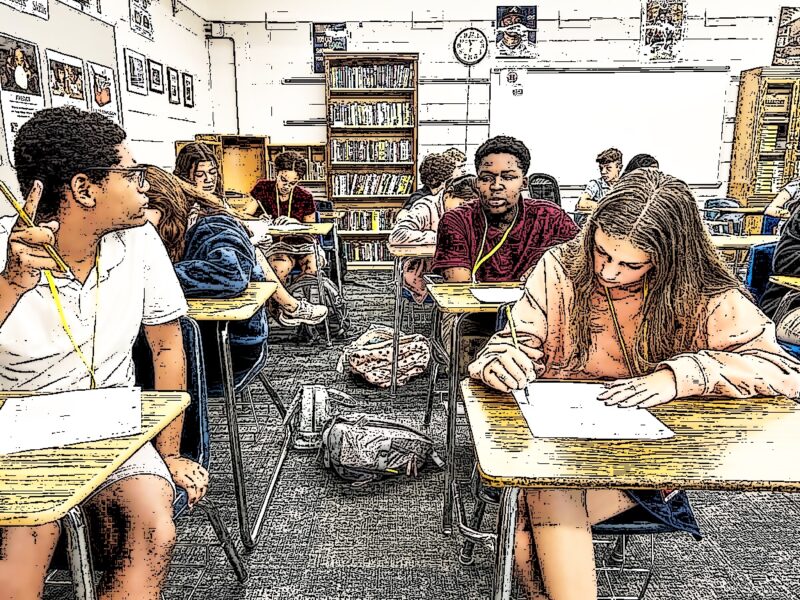

English I students continued with their parts-of-speech review, leaving the traditional order and skipping from adjectives to prepositions to help students identify prepositional phrases. This will help them with all the other parts of speech, especially since we’re going to be covering active/passive when we get to verbs later this week.

English 8 students continued with the district-designed argument unit, which is based on the newly adopted textbook. We’re looking at a second article dealing with automation and employment; it makes the opposite claim from last week’s article, “The Automation Paradox.”

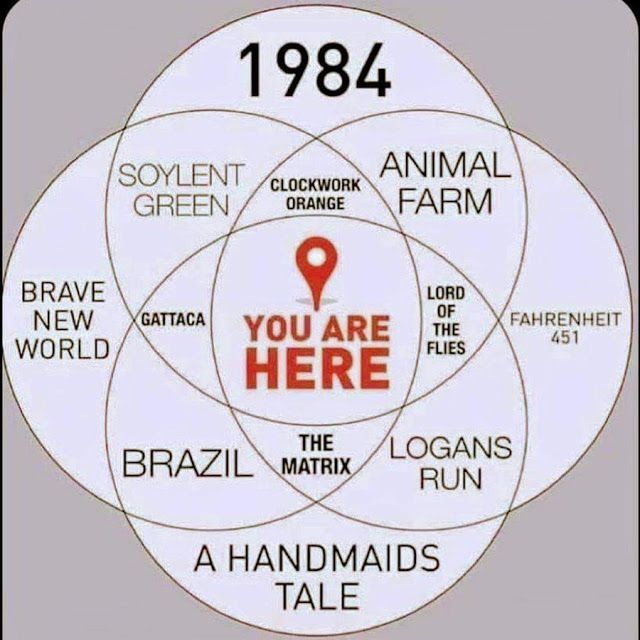

South Carolina Regulation 43-170 has been wreaking havoc on education this year, and few are more directly affected than humanities teachers. It reads, in part, “Instructional Material is not “Age and Developmentally Appropriate” for any age or age group of children if it includes descriptions or visual depictions of “sexual conduct,” as that term is defined by Section 16-15-305(C)(1).”

In turn, Section 16-15-305(C)(1) reads:

(1) “sexual conduct” means:

(a) vaginal, anal, or oral intercourse, whether actual or simulated, normal or perverted, whether between human beings, animals, or a combination thereof;

(b) masturbation, excretory functions, or lewd exhibition, actual or simulated, of the genitals, pubic hair, anus, vulva, or female breast nipples including male or female genitals in a state of sexual stimulation or arousal or covered male genitals in a discernably turgid state;

(c) an act or condition that depicts actual or simulated bestiality, sado-masochistic abuse, meaning flagellation or torture by or upon a person who is nude or clad in undergarments or in a costume which reveals the pubic hair, anus, vulva, genitals, or female breast nipples, or the condition of being fettered, bound, or otherwise physically restrained on the part of the one so clothed;

(d) an act or condition that depicts actual or simulated touching, caressing, or fondling of, or other similar physical contact with, the covered or exposed genitals, pubic or anal regions, or female breast nipple, whether alone or between humans, animals, or a human and an animal, of the same or opposite sex, in an act of actual or apparent sexual stimulation or gratification; or

(e) an act or condition that depicts the insertion of any part of a person’s body, other than the male sexual organ, or of any object into another person’s anus or vagina, except when done as part of a recognized medical procedure.

This is in the 2023 South Carolina Code of Laws, Title 16 (Crimes and Offenses), Chapter 15 (Offenses Against Morality And Decency), Section 16-15-305 (Disseminating, procuring or promoting obscenity unlawful; definitions; penalties; obscene material designated contraband).

So this morning, I walked into the teachers’ workroom to put my lunch in the refrigerator, and the drama teacher was making copies.

“Are you still able to teach Romeo and Juliet?” she asked.

I told her that as far as I knew, we were still able to teach it. It is, after all, in the textbook the South Carolina Department of Education approved. I asked her what she meant.

“We’re getting word that his plays are a bit too controversial, and we might not be able to act them anymore,” she explained.

Pretty much.

The Honors kids are working through a parts-of-speech review, and today we went over pronouns. (Not for the whole class, mind you — we only spend about 15 minutes per day working on this. Otherwise, it would be numbingly boring for everyone, including me.) Students were identifying demonstrative, interrogative, and relative pronouns, and number five was a question, an excellent opportunity to look for interrogative pronouns.

“Let’s skip to five,” I said, giving them a moment to read it. “The first pronoun in that sentence — can anyone identify it?”

A smart young lady raised her hand. “What,” she replied correctly.

And then it hit me — there’s always a joke of the day. I like to make the kids laugh, though most of my jokes make them groan. But here was a chance to recreate a classic.

“Number five,” I repeated. “The first pronoun.”

“What,” she repeated, a little confused.

“I’m asking you — the first pronoun in number five.” I had to phrase the next part just right. “It’s what?”

“Yes.”

“Yes, what?”

“It’s what,” she confirmed, her eyebrows furrowing a bit more, smiles starting to appear around the room.

“What?”

“Number five?”

“Yes. I’m asking you. The first pronoun.”

“What.” She was starting to catch on here.

“The first pronoun!” I let a little faux frustration creep into my voice. “Look at number five and identify the first pronoun.”

“It’s what!” She wore a full smile, having caught on at that point.

“Why are you asking me?! I know what it is. I want to see if you know. What is it?”

“Yes!” Now she had it.

“Yes, what?!”

“Exactly!”

By now everyone was giggling, including her.

“Does anyone know what we just recreated?” I asked.

“Who’s on first?” came a voice from the back.

“Very good!” And our first brain break of the day was to watch the first few minutes of that classic.

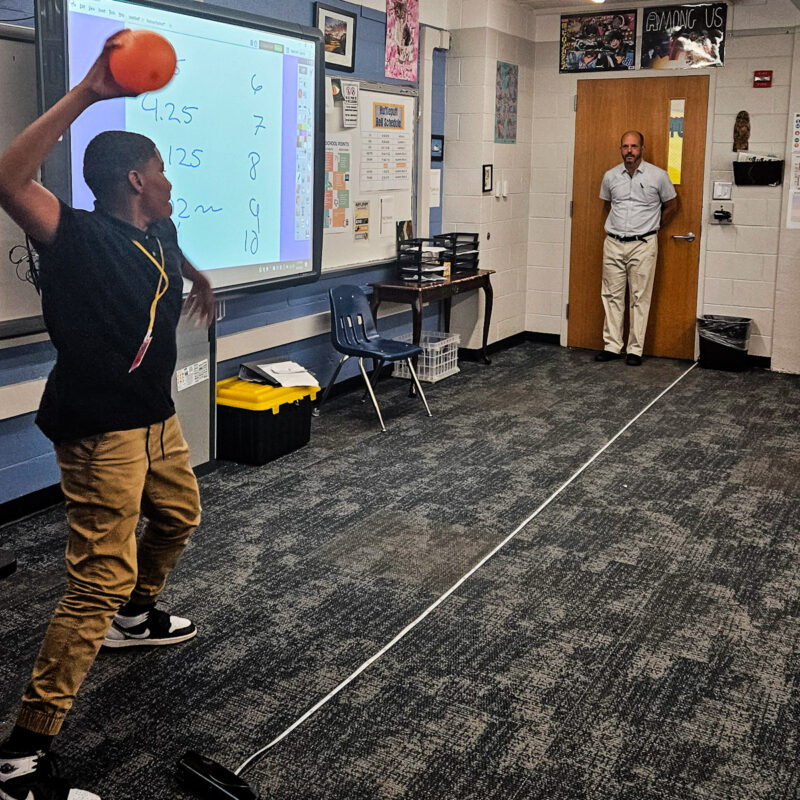

In English 8, we’re off to our next set of required readings. I have very little say in what I teach English 8 this year, and a lot of the materials are too difficult for my students and too — quite frankly — boring to get their interest. Our piece is called “The Automation Paradox,” and so we did a little pre-teaching today so kids know what a paradox is. To that end, I introduced them to Zeno’s Paradox. We did some measuring, completed a little math, and I convinced them that the math was solid: the arrow should never have hit Zeno.

So then we tested the theory with a ball, which students took turns throwing at me. I was fairly sure they would hit me quickly, but I failed to take into account the light weight of the ball and the tendency of smooth, light balls not to travel in a straight line. So most of the throws missed.

But the point was made. And the kids had a blast throwing that silly ball at me.

This is what makes middle school so fun: they’re cognitively developed enough that we can get into some abstract thinking and still childlike enough to enjoy being silly.
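One way to write down the halving argument, assuming the standard "cover half the remaining distance each time" version of the paradox (the symbols $d$ for the distance to the target and $v$ for the arrow's constant speed are introduced here purely for illustration):

```latex
\[
\sum_{n=1}^{\infty} \frac{d}{2^{n}}
  = \frac{d}{2} + \frac{d}{4} + \frac{d}{8} + \cdots
  = d,
\qquad
\sum_{n=1}^{\infty} \frac{d}{2^{n}\,v} = \frac{d}{v}.
\]
```

There are infinitely many steps, but both the distances and the times add up to finite totals, which is why the ball eventually lands despite the argument.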

There’s a caterwauling feline I’m tossing around above my head that seems determined not to be part of this juggling act, and I can’t really blame it: after all, I’m also juggling a set of kitchen knives and a chainsaw, along with some greasy ball bearings and a blob of slime one of my students made, and they’re all getting tangled up in the random arcs in which they sail over my head. Every time the cat comes into my grasp, I get a fresh set of deep gouges as the cat’s claws rip into my skin. At the same time, I have to worry about the slices the knife blades so desperately want to inflict on my arms, and the chainsaw seems determined to take off one appendage or another. The greasy bearings and slime are just the last lovely touch as they somehow make my hands simultaneously slippery and sticky, complicating the entire process. And so I’m bound to drop something.

I have two classes of eighth-grade on-level English. In one class, I have seven students of such low English ability that I’m supposed to make alternative versions of most things we do. In both classes, there are also students with special education requirements that are similar. Some of these kids need only a little help; others need a lot. So for multiple-choice tests, I make three versions: one with four possible answers per question, one with three possible answers per question, and a final one with two possible answers per question. Once I make these tests, I have to make sure that the right student gets the right test, which can be particularly challenging since most of them are supposed to be administered electronically so that we have “data” to assess. (I put that in quotes because a) it’s representative of the foundation, indeed, cornerstone of edu-speak these days and, as a result, b) I absolutely loathe it.) So what happens if I give

The shells on the beach just at the edge of the surf were visible for only a few moments before the white bubbles and turbulence hid them again. In the brief time I could clearly see them in the shallow water, it was obvious most of the shells were only fragments, often smaller than the smallest coins, slivers well on their way to becoming grains of sand. Every now and then, a shard would catch my eye, and I would think, “I might try to grab that one” just before an incoming wave hid it once again. By then it was too late: once the water cleared up, the tide would have taken the shard so far away from its original position that finding it was all but impossible. Another might catch my eye, but then the process would simply repeat itself.

To get a shell required calm and patience followed by a paradoxical ability to move quickly when needed. Hesitation meant the loss of the moment. In some ways, that’s a metaphor for life in general for many people. Everything is about getting the right moment, and when that fails, increased stress is the outcome.

Yet the older I get, the more I realize the error in living like that, the unnecessary stress it causes. Yes, I might not get that exact shell that I wanted, but there were plenty of other shells that were just as lovely, often more so.

I know today’s meeting with a counselor was very important for students so they would have an idea what to expect in the soon-coming high school registration process. I know they need to know this stuff. But do they have to learn it in my class? A class that is tested by the state? A high-stakes class?

I’ve written often enough, I suppose, about how my Saturday rhythm has changed over the last forty years or so. Saturday once meant church, seclusion, no work, no socializing with non-church folks, no sports, no school-related activities. Nothing that could pollute our minds or get our focus away from our sect’s teachings.

Saturday afternoon at 2:30 we met at the IBEW (International Brotherhood of Electrical Workers) union hall. We usually arrived at least an hour earlier, and stayed at least an hour past the 4:30 end time. Every Saturday afternoon, a two-hour meeting during which men of dubious theological education pontificated about the conspiracy theories that comprised the bulk of the organization’s theology. The only saving grace was the playing (and later, as a teenager, socializing) that took place before and after the meeting.

These days, my Saturdays are so much more fluid. Sometimes, there’s a clear outline to the day, with chores in the yard occupying much of my time. Once school starts, I spend a fair amount of the morning grading students’ work. Today, for example, I went through 43 kids’ single-paragraph analyses of “The Cask of Amontillado.” They wrote things like this:

The narrator’s story can be trusted because Montressor is confessing his actions to the priest on his deathbed. For example, Montressor talks to the preist because he knows the “nature of [his] soul.” and would not believe that he “gave utterance to a threat”. This proves that the priest knows Montressor very well, probably because the same priest would come to his house often. The priest also would not suppose Montressor killed someone. He would most likely want to admit his wrong doings before he died. Another example is, In “half of a century” no one has disturbed the catacombs or found Fourtunado’s body. It shows that no one has found out what happened to Fortunato 50 years later. This also explains the reasoning why Montressor would tell his priest, because he would be very old by this time; old enough to be on his deathbed. To sum it up, because Montressor is confessing to the priest that he killed Fortunado, this narrative can be reliable.

I worked through the papers in between trimming shrubs, cleaning my bike chain, and cleaning out the basement.

The shrubs — didn’t L just trim those? Her chores on Saturday usually include getting crickets for her frog, shopping (she usually gets the week’s groceries on Fridays, but there’s always something more we need), and cleaning her room.

The bike chain — didn’t I just clean it? Bike maintenance is something I’ve never really enjoyed. It’s so tedious cleaning a chain, replacing cables, adjusting brakes, replacing tires. But the worst of it all is definitely chain cleaning. No matter how carefully I clean it, there’s always a bit of grime left behind. But nothing makes a bike look better than a spotless chain.

Today, I used a new degreaser, and I was fairly pleased with the results. Ultimately, what I’d like is an ultrasonic cleaner that I could just drop the chain into for a few minutes and then let dry. But in the meantime, I’ll use a degreasing solution and toothbrush.

Cleaning out the basement — there’s been a crate of old books that K will eventually take to Goodwill, and among the books are several of my college lit anthologies. I’ve kept them for so long because — well, I really don’t know why. I haven’t cracked one open in so long. I had them at school for a long time, but I’ve run out of shelf space and brought them home.

That is a story in and of itself. Last year, the state of South Carolina provided each English teacher with $3,500 worth of independent reading books so we could have a classroom library of contemporary, high-interest books. But this year, things changed:

Effective August 1st, 2024, SC Regulation 43-170 requires teachers to produce a complete list of the Instructional Materials (including classroom library books) that are used in or available to a student in any given class, course, or program that is offered, supported, or sponsored by a school, or that are otherwise made available by any District employee to a student on school premises. That list shall be provided upon reasonable request by any parent/guardian of a student in the District.

Greenville County Schools Press Release

In short, we’re not to have any books that even hint at sex. It’s another last-gasp effort of the far right to maintain its stranglehold on young people’s minds, I say to myself. For me, it’s simply a headache, which is why I’ve closed my library: I haven’t made the list yet, and I have no idea when I’ll be able to. In the meantime, I posted a sign explaining the situation, and I look forward to Meet the Teacher night when all parents can see the signs because I’m going to make my presentation standing right beside one.

So I guess in a way, my Saturdays have come full circle.

Every year it’s the same: I’m going to do a better job encouraging and facilitating independent reading. And every year’s initial visit to the library starts out with that intention. And then reality sets in, deadlines for covering content loom, and the independent reading time slowly gets strangled.

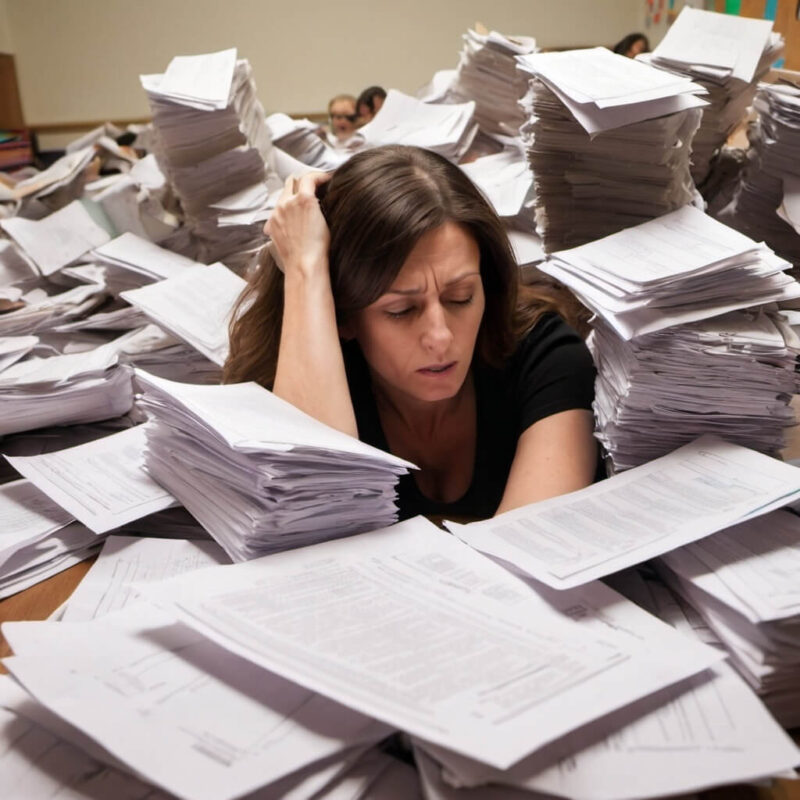

What’s on my mind lately? The amount of stuff I have to do:

And that really doesn’t cover everything — that’s just what I could list off the top of my head.

Is it any wonder so many teachers are burning out?

It was near the end of the school day, and the eighth-grade assistant principal pulled me out of my classroom to tell me something. “You’re going to get an email in a little bit that I don’t want you to read until you get home, relax, have some dinner, and then have a drink.”

I knew what was in that email from what she said. It’s something that has been bouncing around for a year or more and now has finally come to full fruition: South Carolina Regulation 43-170.

The email from our principal included a link to the official district explanation:

Effective August 1st, 2024, SC Regulation 43-170 requires teachers to produce a complete list of the Instructional Materials (including classroom library books) that are used in or available to a student in any given class, course, or program that is offered, supported, or sponsored by a school, or that are otherwise made available by any District employee to a student on school premises. That list shall be provided upon reasonable request by any parent/guardian of a student in the District.

What does this mean in practical terms? The principal spelled it out in no uncertain terms:

In short, all the materials we might give to a student in a given year.

Why?

Because there’s a concerted effort among teachers to turn as many students gay as possible and promote critical race theory every chance we get. We have so few other demands on us that, out of a sense of woke duty, we purposely spend time trying to turn kids gay and putting down whites. All mathematics word problems are set in San Francisco or must include some anti-white framing. History teachers eschew all periods of history except the Stonewall riots and contemporary history with a bent toward institutional racism. Science classes neglect all disciplines except genetics, and they only discuss the gay gene. Finally, we English teachers simply have students watch episodes of Will and Grace and write summaries of them.

In case anyone can’t tell (and those who proposed and promoted this law probably can’t tell), this is satire. I feel it necessary to state that upfront. None of this actually happens. That goes without saying, but just in case someone stumbles on this and uses it as proof that teachers are encouraging students to identify as gay, anti-white cats, I must say emphatically once again, this is not happening. When dealing with a blunt viewpoint, one must use blunt instruments.

If either of our kids expressed any interest in going into education, I would try my hardest to discourage them. I’ve come to wonder whether or not there is a conscious effort to destroy public education by placing such onerous demands on teachers that the majority of them quit so that the state can farm out education to private firms just like so many states have done with correctional institutions.

We’ve been in school for nearly two weeks now. It’s time for the honeymoon period to end, sending everything into a series of predictable unknowns: who is going to turn out to be the nearly constant talker who, when redirected, grows aggressive and disrespectful? Who is going to become the example of a nearly always bad mood? Who is going to start refusing to do much of anything?

Usually by this time of the year, I can see those students starting to let the cover drop and be their true selves. Last year was so tough there was no honeymoon period: we eighth-grade teachers could see it clearly the first day.

This year? I’m still waiting for them to appear.

I’m trying not to get too unrealistically optimistic about it. They’re sitting in my class for sure. They have to be: they’re always there.

But maybe, just maybe, not this year?

We all came back to school hoping this year would be better, hoping that some of the bureaucratic micro-managing the district is forcing on our school would lessen somewhat. Last year it was reports that disappeared into the netherworld, reflections that sat in shared Google Drive folders unread (at least nothing was said about them), data that was just useless numbers comparing (incoming cliche alert) apples to oranges. I wrote a sixteen-page document detailing all the problems with all the nonsense and sent it further up the food chain.

We thought — worst case scenario — that we would at least stay the same, that the bureaucracy would just level out and be consistent with last year’s level of paperwork. We hoped — best case scenario — that it would lessen at least a little. What we didn’t fear was that even more nonsense would get dumped on us, but perhaps we should have.

Perhaps I’m biased, but I feel the English department is getting the worst deal of all. We got new textbooks this year, and we were told we have to follow the district-provided pacing guide exactly and administer the district-provided final assessment without exception. We have to weave in other district requirements for our school without regard to whether the requirements help us become better teachers, without regard to whether the tool we have to use to do this is effective, without regard to any conflicts that might arise between this requirement and those thirteen-thousand other requirements.

Last year, we had two required “Common Formative Assessments” (God, how I hate that term now) each quarter. These were multiple-choice assessments administered through Mastery Connect (the worst program on the planet — a veritable cornucopia of bad design and worse implementation) that all grade-level English teachers had to give. They were to cover one standard. The problem is, all the questions from the district-required question bank (we were told we were not allowed to create our own questions) accompany texts, so to get ten questions about one standard, we were having kids read five or six texts. An assessment we were told should take fifteen to twenty minutes invariably took the entire class period. Occasionally, the questions themselves were useless. If the standard was about finding evidence in a text, the question would often be a “Part B” question: “What is the best evidence to support the answer in ‘Part A’?” Where’s part A? Who knows? Buried somewhere in Mastery Connect.

This year, though, we’re required to do three CFAs (we love our acronyms in education) per quarter. That means three full class periods to administer an assessment that — here’s the real kicker — none of the English teachers even think is useful. It is, in short, a total waste of time so people further up the chain can write reports for people further up the chain from them.

All without asking for any feedback about the efficacy of the procedures we have in place.

Additionally, we now have a required CSA — Common Summative Assessment. We’ll just use the district-required unit test to fulfill that requirement, but the first unit’s test is eighteen pages long. We estimate it will take at least two class periods. And right after taking that test, they’re taking a benchmark test (for which we shorten all classes that day to half an hour and give them the entire morning), which is essentially the same damn test.

And we’re to do that every single quarter.

That’s six or seven days per quarter just for assessments that we the teachers don’t even think are effective. That’s twenty-four to twenty-eight days of the school year. That’s 13-16% of the entire school year just for this asinine testing. And that doesn’t even take into consideration the other days for other alphabet soup tests. Taken altogether, we’re looking at nearly 20% of the school year spent taking useless multiple choice tests.
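For anyone who wants to check that arithmetic, here is a quick sketch. It assumes a 180-day instructional year and four quarters, which are assumptions made for the illustration rather than figures stated above, and the function name is made up.

```python
# Assumptions (not stated in the post): 180 instructional days, 4 quarters.
SCHOOL_DAYS_PER_YEAR = 180
QUARTERS_PER_YEAR = 4


def assessment_share(days_per_quarter: int,
                     school_days: int = SCHOOL_DAYS_PER_YEAR) -> float:
    """Return the percentage of the school year spent on assessments."""
    days_per_year = days_per_quarter * QUARTERS_PER_YEAR
    return days_per_year / school_days * 100


# Low and high estimates from the paragraph above: six or seven days a quarter.
for days in (6, 7):
    total = days * QUARTERS_PER_YEAR
    print(f"{days} days/quarter = {total} days/year = "
          f"{assessment_share(days):.1f}% of the year")
```

Under those assumptions, six days a quarter comes to about 13.3% of the year and seven days to about 15.6%, which is where the 13-16% range comes from.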

And when students don’t do as well as we think they should, do we step back and say, “Hey, what did we make the teachers do that could have contributed to this”? Of course not: teachers are the only variable in this whole equation that districts can boss around and legislatures can legislate, so we take the blame for everything.

If either of our children were thinking about going into education, I would tell them that I’m not paying for their college unless they do a double major and take a second degree in some other field so they have options for when they’re facing the situation that we’re facing. I’m fifty-one years old; this is only getting worse; I have few to no options but to keep plowing through it.

But after today, I’d almost kill for options outside the classroom.

“Mr. S, you’re my favorite teacher so far.” We were lining up this morning to head out for related arts (or “essentials” as the new nomenclature dictates — people in education love to rename things to show supposed progress and improvement), and he said this out of the blue.

“You’ve only had one class with each of your teachers so far,” I laughed. “How could you possibly form an opinion that fast?”

“Well, your class was the only class we actually did something in yesterday,” he clarified.

I am not one to begin the first day of school with a long lecture explaining all the ins and outs of my classroom procedures. Sure, I have a specific way I want students to turn in papers, but I’ll explain that when they have their first papers to turn in. Certainly, I want them to know about my website, which I work hard to keep updated daily, but I’ll show them that when I’ve created my first update so they realize firsthand how useful the site can be. Definitely I want them to understand how we’re going to get into groups for collaboration, and I want them to know where each group is to sit, but we’ll go over that when we get in groups for the first time. So the first day, I always make sure we work. We do some writing, some reading, some chatting. We work in groups; we work in pairs; we work individually.

And the second day, we go over procedures.

Are you kidding? We have too much material to cover! Any procedures I’ve neglected will have to wait until that first time we need it!

Last year’s first day — exactly one year ago — was a little strange. In here, I wrote it was a good day, but that was not entirely true. My two on-level classes were, in a word, hyper. Several students were immediately chatty, immediately disruptive, and there were several more students who fed into that. There was a bit of attitude at times, and while I tamped it all down quickly, it didn’t seem to bode well for the rest of the year.

I was right.

Last year’s eighth grade was tough. We’d heard they’d be tough from sixth-grade teachers; we’d heard they’d drive us to insanity from seventh-grade teachers; and we saw the difference immediately.

Most eighth-grade classes are pretty calm at first. Most eighth-grade students are reasonably relaxed those first days, trying not to push boundaries, trying to make a decent first impression. Those kids (rather, many of them) did not do this. And it was a harbinger of things to come.

“This year’s kids are better,” everyone said. We met them all today, and I would have to agree: a night-and-day difference.

One less stress.

Our kids started school with the usual excitement: the Girl is starting her senior year (how in the world is that possible?) while the Boy is starting seventh grade (how in the world is that possible?).

“Enjoy your last first day of school,” I said to her, though that’s not quite accurate. She’s planning on going into bio-engineering, and she’s already planning on getting a doctorate, so she has plenty more first days of school.

As for the Boy? A snippet of a conversation from a couple of weeks ago says it all: “You have to pay for college?! You have to pay to sit in school?!”

Our school district has a way of jostling teachers out of their comfort zones. Take this year, for example. We’ve known for a long time that we’ll have new standards for English. The logical way to let teachers transition to these new standards is to let them take their existing lesson plans and retool them as necessary to meet the new standards. True, they are, by and large, almost the same standards, but there are some new items on that list which will take some time to unpack and figure out how to teach. Perhaps letting us focus on that during the first year would be a good move.

We’re also getting new textbooks this year. This means that a lot of the stuff we’ve done in the past might not necessarily work with the new selections in the new textbook. A lot of it will, but not everything. The logical way to transition to this new textbook would be to give teachers a year or two to make the move over. After all, we’ll likely be using these books for six or eight years. We can take our time with transitioning and make sure we do a good job.

Or our district could manage these transitions as they actually chose to this year:

There are a lot of stressed teachers today. I had to talk an experienced teacher out of walking out and simply quitting today. This is her last year before retirement, and it’s not how she wanted to end her career. If she’d walked out, I wouldn’t have blamed her.

“Last year’s kids were a real challenge,” the seventh-grade teachers all admitted. And to be fair, they warned us about them this time last year: “This is some group!” We hear that a lot, and we put it down to a typical exaggeration: they’re never as troublesome as last year’s teachers make them out to be.

But last year, they were right. One-hundred percent accurate. Last year’s group was exhausting.

“This year is going to be so much calmer for you guys!” all the seventh-grade teachers have been reassuring us during these first days back. Today we met a lot of them.

It’s hard to tell after such a short exchange, but we are, indeed, hopeful.

We started the day (as in right after roll) with a final fire drill. All the eighth-grade students went to the area by the basketball courts and lined up as always. Almost. The difference was immediately visible: only about a third of the students were there today.

After spending a little time outside, I had kids help me pack up all my books, which I have to do every single year, which is really a pain.

And we also said goodbye to a kid who changed everyone’s life on the eighth-grade hall for the better.