K began preparing for our Easter party today, with some pate being the first task on the list. Some liver, some chicken, some spices, some baking — it will be amazing as always.

Of course, even if we didn’t have a calendar, we’d know it’s almost time for Easter with all the wisteria blooming here in the south. Is there a more perfect flower?

Got to see Pat Metheny perform live tonight.

Everything I expected, and more…

My English 8 students are beginning my favorite unit of the year: The Diary of Anne Frank. We’ll be acting out the play in class (at least some of it), reading actual diary entries and comparing them to the play, looking at how the authors use only dialog to develop the characters for the audience.

One of the things I love about this unit is that a lot of kids who might not otherwise be so eager to participate become a little more engaged, a little more focused.

And it’s just fun…

The view outside my window would make it clear even if my mental state didn’t:

Today was the first day back from spring break, and while I was pleased to see my students, I wasn’t as happy about having a regimented schedule.

Classes went well: everyone started a new unit (The Diary of Anne Frank and To Kill a Mockingbird), and everyone was relatively focused.

A good day, overall.

The Girl’s club team finished out their regional tournament run going 7-1. They only lost one game — the last one.

We’re still so proud of our girls’ accomplishment — second in regionals!

The Girl had a track meet today.

She’s only doing high jump and javelin this year, so there was less running from event to event and more waiting.

She ended up taking second in the javelin but with a distance that was a school record.

She won high jump but with a jump significantly lower than her personal best (which is also a school record).

Tournament and family game night.

Just to keep the streak going — we finished L’s new bed, did some stuff in the yard…

It’s not that we haven’t had the weather; we haven’t made the time…

Day two wasn’t as successful as day one. They lost their first game out in three sets. Since it’s bracket play, that put them immediately in the consolation bracket…

And the only picture from today: a 20+ year old picture from Slovakia on New Year’s Day.

We had our first of two tournaments during this spring break week today — at least, the pool play was today. Our girls are the number 4 ranked team in the tournament, and they were the highest-ranked team in their pool. They faced off first with a team from Myrtle Beach and dispatched them quickly: 25-12, 25-9. The Myrtle Beach team, ranked 18th in the tournament, just didn’t seem like they had their act together at all.

After a short break, they faced their second opponent, a team called Xcell, ranked 22. Our girls finished them off in straight sets as well. During the next break, the Myrtle Beach team played the second-highest-ranked team in our pool, a team from just north of Charlotte. They lost the first set 17-25 but won the second set 25-22. They dropped the third set 13-15, and I found myself thinking that if a team we beat 25-12, 25-9 took a set from the team from Charlotte, we shouldn’t have too much trouble with them.

After another game, our girls faced off against them, the two undefeated teams in the pool. The first set was close for the first ten points each or so, but then our girls started pulling away and ended up winning 25-19.

The second set, our girls started falling apart a little, and then a lot. Soon, they were down 6-13. K was watching a stream of the game from home, and she went to the laundry room to do some work. Our girls decided to get some work done as well and went on a 10-1 run. From that amazing 16-14 comeback, they just kept plowing ahead, winning in the end 25-20. That means from 6-13 to the final 25-20 result, our girls went on a 19-7 run.

So they were the only undefeated team in the pool. They’ll start tomorrow in the early afternoon.

And the shocker: I decided not to take a camera today and simply enjoy the moments.

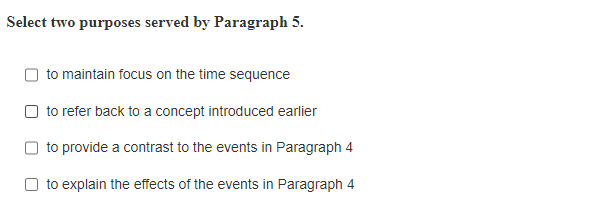

Over the last few years, Greenville County Schools has become increasingly dependent on Mastery Connect (MC) as its primary summative assessment tool. Over the last two years, GCS has been pushing for increased use of MC as its primary formative assessment tool as well. While I am often an early adopter of new technology, and although I am an advocate for technology-assisted assessment, I cannot share GCS’s enthusiastic support of Mastery Connect, and I have serious concerns about its effectiveness as an assessment tool: because of its poor design and ineffectively vetted questions, the program, instead of helping students and teachers, only ends up frustrating and harming them.

Using Mastery Connect with my students is, quite honestly and without hyperbole, the worst online experience I have ever had. I am not an advocate of the ever-increasing amount of testing we are required to implement, but having to do it on such a poorly-designed platform as Mastery Connect makes it even more difficult and only adds to my frustration.

I don’t know where MC’s developers learned User Interface (UI) design. It is as if they looked at a compilation of all the best practices of UI design of the last two decades and developed ideas in complete contradiction to them. If there were awards for the worst UI design in the industry, Mastery Connect would win in every possible category.

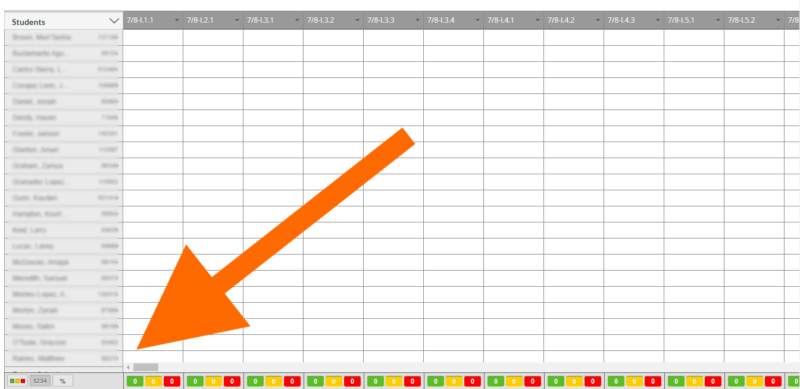

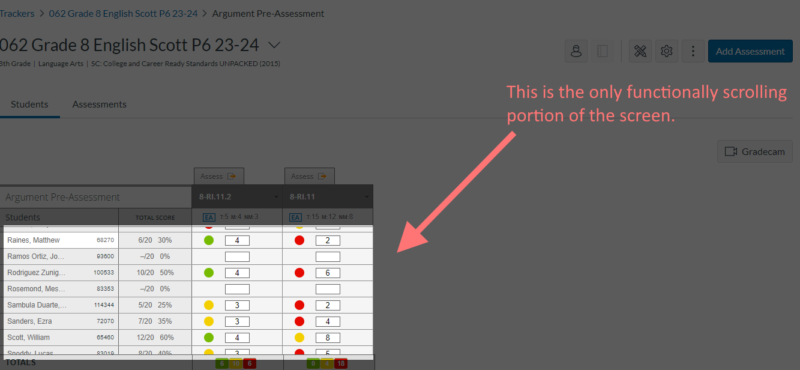

Note in the above image that there are more students in this particular class (or “Tracker” as you so cleverly call it). However, the only scroll bar on the right controls the scrolling of the whole screen. To get to the secondary scroll bar, I have to use the bottom scroll bar to move all the way over to the right before I can see the scroll bar. This means that to see data for students at the bottom of my list, I have to first scroll all the way to the right, then scroll down, then scroll back to the left to return to my original position. Not only that, but the secondary vertical scroll bar is almost invisible. My other alternative is to change the sorting order to see the students at the bottom.

This is such a ridiculous UI design choice that it seems more to be a literal textbook example of how not to create a user interface than a serious effort to create a useful and easily navigable tool for teachers.

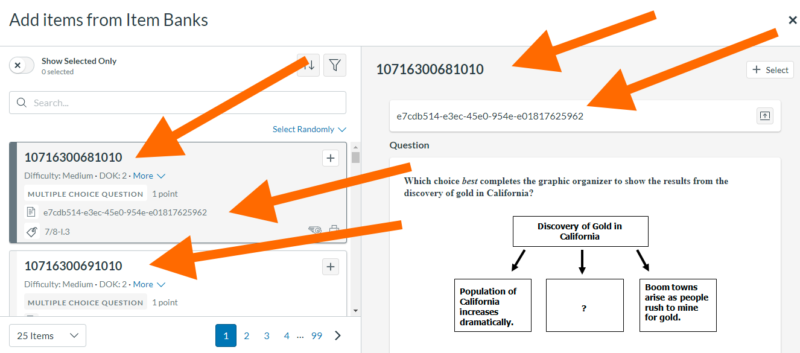

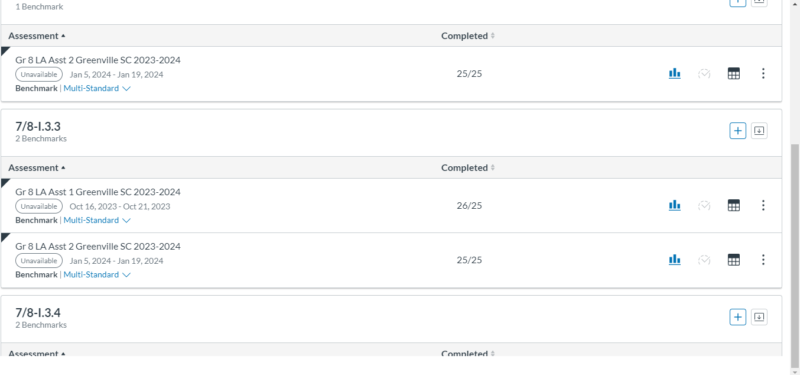

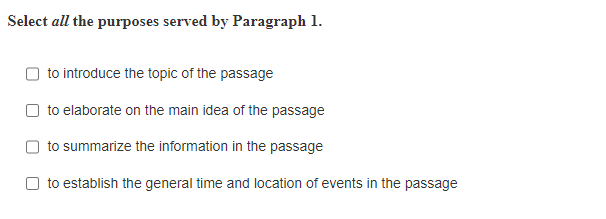

When we get to the assessment creation screen, we see even more poor design. The information Mastery Connect provides me about a given text is a collection of completely useless numbers:

There is no indication about the text for a given question, no indication about what the actual question is. Instead, it’s a series of seemingly arbitrary numbers.

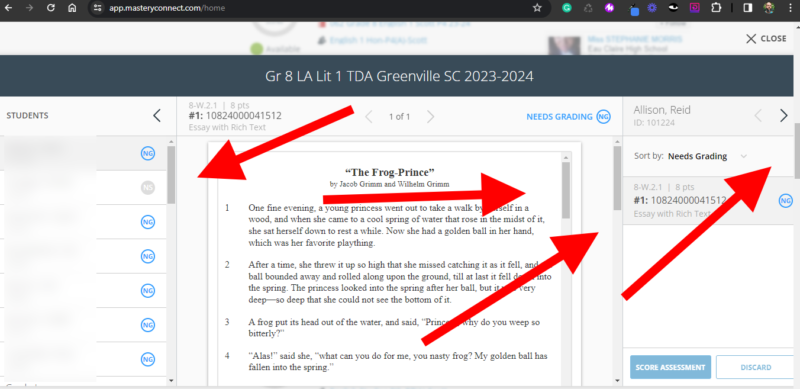

Finally, once I try to look at the completed assessment, I have even more challenges.

To navigate to see the students’ work, I have to deal with four scroll bars. Four! If there were an award for most inept UI design, this would have to be the hands-down winner. For twenty years now, basic UI design best practice has been to limit the number of scroll bars because the more there are on a page, the more inefficient and frustrating the user experience.

The fact that the district has made Mastery Connect the center of its assessment protocol given this bad design that makes it difficult for me even to access information is so frustrating as to make me think that perhaps it wasn’t the program itself that sold the district on spending this much money on such a horrific program.

When I want to look at the results of the assessment, there’s another significant problem: the vast majority of the screen is frozen while only a small corner (less than 25% of the screen) scrolls.

The results of the assessment are visible in the non-shaded portion of the screen. The rest of the screen stays stationary, as if there is a small screen in a screen. Not only that, but that portion of the screen does not include any indication that it does scroll: a user only happens to discover this if the cursor is in the lower portion of the screen and then the user presses the arrow-down button. Otherwise, a user is just going to face increasing frustration at not being able to view past the first seven students in a given tracker.

A further problem arises when trying to view assessments grouped by standards. In the screen shot below, it’s clear that while the scroll bar is at its lowest extreme, there is still material not visible on the page. How one can access that information remains a mystery to me.

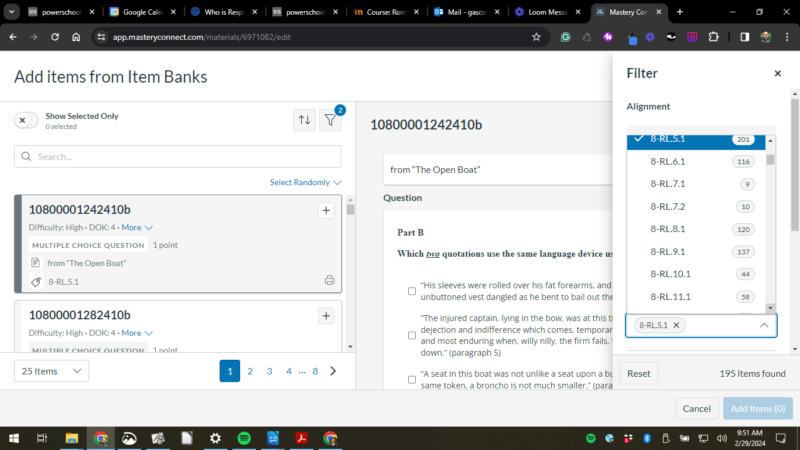

When creating a CFA or even deciding on which standard to assess for the CFA, it would be useful to be able to browse questions without first creating an assessment. This, however, is not possible. Browsing for content in a given program seems like such a basic function that all websites have that we take it for granted, but it’s not available in MC.

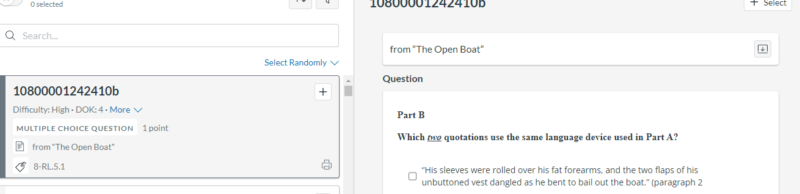

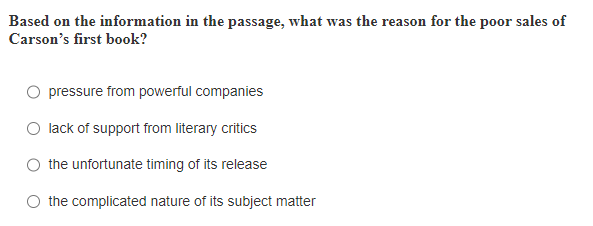

Many of the questions are two-part queries, with the second part usually dealing with standard 5.1, which for both literary and informational texts is the same: “Cite the evidence that most strongly supports an analysis of what the text says explicitly as well as inferences drawn from the text.” The problem is, when one is creating a formative assessment to target that one standard and one uses the filter view to restrict Mastery Connect to that single standard, the “Part B” question shows up with no indication of what “Part A” might be:

One teacher explained to me that she found a way to fiddle with the question number to trick the program into showing more questions about a given text. Again we’re working to overcome the deficiencies of the program.

This is especially problematic for a CFA because we are trying to focus on a single standard. Standard 5.1 is arguably the most foundational of all standards, and this is even reflected in the number of questions per standard: RL-5.1 and RI-5.1 have vastly more questions than any other standard, yet they are usually tied to another question, and it is all but impossible to figure out what that question is.

There’s a certain irony in the fact that the most foundational standard is all but impossible to assess in isolation.

Finally, some standards are grouped together into their parent standard, and this can create significant issues when trying to create an assessment that includes one standard but excludes another. For instance, questions about RI-11.1 and RI-11.2 are combined into RI-11.

RI-11.1 is about text structure. (Strangely, so, too, is RI-8.2, a fact districts have been pointing out to the state department since the standards were released. If MC were the kind of program the district touts it to be, the programmers would have realized this and dealt with it within the program in some efficient manner.) Standard RI-11.2 deals with evaluating an argument. It says a lot about the state department that they grouped these two ideas together under a main standard of RI-11, but it says even more about MC that they then take these two standards, which are radically different, and drop them into the same category. This means that when trying to make a CFA that deals with evaluating an argument, one has to deal with the fact that many of the questions MC provides in its search/filter results will have to do with a topic completely unrelated.

There are almost no ELA questions in the MC database that are not text-based. Even a standard like RI-8.2 (“Analyze the impact of text features and structures on authors’ similar ideas or claims about the same topic,” which, again, is identical, word for word, to RI-11.1) has entire essay-length questions. If one wants ten questions about standard RI-8.2/RI-11.1, instead of delivering ten single-paragraph questions asking students to identify the text structure in play, creating such an assessment would likely result in eight multi-paragraph texts for ten questions. This means that such an assessment would take an entire class period for students to complete.

Indeed, it has taken entire class periods. Below are data regarding the time required to implement CFAs during the third quarter of the 2023/2024 school year. Periods 4 and 5 were English I Honors classes; periods 6 and 7 were English 8 Studies classes.

| Period | Total time (h:mm:ss) | Students | Man-hours (h:mm:ss) |
|---|---|---|---|
| 4 | 0:26:00 | 24 | 10:24:00 |
| 5 | 0:25:00 | 29 | 12:05:00 |
| 6 | 1:46:00 | 29 | 51:14:00 |
| 7 | 1:43:00 | 29 | 49:47:00 |
| Total | | | 123:30:00 |

The roughly one hour and forty minutes per English 8 class amounts to two entire class periods spent on Mastery Connect. That is two class periods of instruction lost because of the assessments Mastery Connect creates. Multiplied out for all students, it comes to an astounding 123 man-hours spent on Mastery Connect. This does not take into consideration other, district-mandated testing such as benchmarks and TDAs, all done through MC.

It’s difficult to comprehend just what an impact this use of time has on students’ learning. The 123 man-hours spent on MC equate to three weeks of eight-hour-a-day work. That is a ridiculous and unacceptable waste of student time, and that is just for one team’s students. If we take an average of the time spent per class, that comes to 1:05. Multiply that across the school, and we arrive at a jaw-dropping 975 man-hours spent in the whole school just for English MC work. If those numbers carry over to the other classes required to use MC for its assessments, the total time spent on MC assessments borders on ludicrous: 3,900 man-hours. That is just for CFAs. Factor in benchmarks and TDAs administered during the third quarter, and that number likely exceeds 10,000 man-hours spent on Mastery Connect.
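For anyone who wants to check the arithmetic, here is a minimal Python sketch that recomputes the figures from the table above. The variable and function names are mine, chosen only for illustration, and the eight-hour workday is the same assumption the paragraph above makes. The school-wide estimates are not recomputed because they depend on enrollment numbers not given here.

```python
# Minutes spent on CFAs and number of students per period,
# copied from the table above: period -> (minutes, students).
cfa_data = {
    4: (26, 24),
    5: (25, 29),
    6: (106, 29),  # 1:46:00
    7: (103, 29),  # 1:43:00
}


def to_hms(total_minutes):
    """Format a whole number of minutes as h:mm:ss."""
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours}:{minutes:02d}:00"


# Student-minutes per period: class time multiplied by class size.
student_minutes = {
    period: minutes * students
    for period, (minutes, students) in cfa_data.items()
}

total_minutes = sum(student_minutes.values())

# Average class time across the four periods, in minutes.
average_class_minutes = sum(m for m, _ in cfa_data.values()) / len(cfa_data)

# Assumption: one workday is eight hours.
workdays = total_minutes / 60 / 8

for period, minutes in student_minutes.items():
    print(f"Period {period}: {to_hms(minutes)}")
print(f"Total: {to_hms(total_minutes)}")
print(f"Average per class: {average_class_minutes:.0f} minutes")
print(f"Eight-hour workdays: {workdays:.1f}")
```

The total works out to 123.5 hours, or a little over fifteen eight-hour days, which is where the "three weeks" figure comes from.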

A common contention among virtuosos of any given craft is that it takes about 10,000 hours to master that skill, whether it be playing the violin or painting pictures. That’s the amount of time we’re spending in our school for one quarter just to assess students, and Mastery Connect’s inefficiency only compounds the problem.

This excessive time spent on Mastery Connect skews the data as well as wastes time. Students positively dread using Mastery Connect: on hearing that we are about to complete another CFA, students groan and complain. There is no way data collected under such conditions can possibly be high quality. Add to it the overall lack of quality in the questions themselves and I am left wondering why our district is spending so much time and money on a program of such dubious quality.

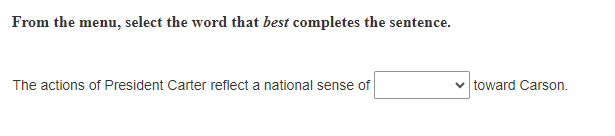

Most worrying for me is the baffling fact that many of the district-approved, supposedly-vetted questions about a given standard have nothing whatsoever to do with the given standard.

As an example, consider the following questions Mastery Connect has classified as having to do with text structure. The standards in question read:

Some of the questions are clearly measuring text structures:

Other questions have only a tenuous connection at best:

The above question has, at its heart, an implicit text structure, but it is not a direct question about text structures but instead deals with simply reading the text and figuring out which comes first, second or third.

The questions above deal with the purpose of the given passage, and text structure is certainly connected to the purpose of a given passage, and that purpose will change as the text structure changes.

Some questions, however, have absolutely nothing to do with the standard:

The preceding question has nothing to do with text structures, and it is in no way connected to or dependent upon an understanding of text structures and their role in a given text. It is at best a DOK 2 question asking students to infer from a given passage, thus making it an application of RI-5.1; at worst it is a DOK 1 question connected to no standard.

The question above is another example that has nothing at all to do with text structure. Instead, this might be a question about RI-9.1 (Determine the meaning of a word or phrase using the overall meaning of a text or a word’s position or function) or perhaps RI-9.2 (Determine or clarify the meaning of a word or phrase using knowledge of word patterns, origins, bases, and affixes). It cannot be, in any real sense, a question about text structures, and it requires no knowledge of text structures to answer.

The preceding question has more to do with DOK 3-level inferences than text structure.

Teachers are told to use this bank and not that bank, but why should the question bank matter? If the program is worth the money the district is paying for it, we shouldn’t have to concern ourselves about the question bank. A question about standard X should be a question about standard X. If one question bank is better than another, that speaks more to Mastery Connect’s quality control and vetting process than anything else.

Due to the poor UI design and the generally poor quality of images used within questions, students with visual impairments are at a severe disadvantage when using Mastery Connect. Images can be blurry and hard to read, and the magnification tool is inadequate for students with profound vision impairment.

I have significant concerns about the efficacy and wisdom of using Mastery Connect as an assessment tool. It’s bad enough that it’s so poorly designed that it appears the developers tried to create a good example of bad user interface design: working with MC is, from a practical standpoint, a nightmare. Add to it the fact that the data it produces for one assessment is often incompatible with data from a different assessment and one would have to wonder why any district would choose to use this program let alone pay to use the program. Neither of those two issues, though, is the most significant concern I have.

When comparing CFAs to benchmarks, we are comparing apples to hub caps. The CFAs measure mastery of a given standard or group of standards. The resulting data are not presented as a percentage correct but rather as a scale: Mastery, Near-Mastery, Remediation. However, the benchmarks do operate on a percentage correct, so we’re comparing a verbal scale to a percentage when comparing benchmarks and CFAs. These data are completely incompatible with each other, and it renders moot the entire exercise of data analysis. Analytics only makes sense when comparing compatible data. To compare a percentage to a verbal scale makes no sense because it is literally comparing a number to a word. Any “insights” derived from such a comparison would be spurious at best. To suggest that teachers should use this “data” to guide educational choices is absurd. It would be akin to asking a traveler to use a map produced by a candle maker.

While my concerns about proprietary data are tangential to the larger issue of the district’s self-imposed dependency on Mastery Connect, they do constitute a significant concern I have about Mastery Connect’s data in general. Because a for-profit private company creates the benchmark questions, the questions are inaccessible to teachers. The only information we teachers receive about a given question is the DOK and the standard. Often, the data is relatively useless because of the broad nature of the standards.

I’ve already mentioned the amalgamation of standards RI-11.1 and RI-11.2:

If I see that a high percentage of students missed a question on RI-11, I have no clear idea of what the students didn’t understand. It could be a text structure question; it could be a question about evaluating a claim; it might be a question about assessing evidence; it could be recognizing irrelevant evidence. This information is useless.

Occasionally, the results of a given question are simply puzzling. In a recent benchmark there were two questions about standard 8-L.4.1.b which is that students will “form and use verbs in the active and passive voice.” At the time of the benchmark, I had not covered the topic in any class, yet the results were puzzling:

| P4 (English I Honors) | P5 (English I Honors) | P6 (English 8) |
|---|---|---|
| 72 | 69 | 36 |
| 72 | 76 | 52 |

For the second question about active/passive, the results among the English 8 students were vastly better, especially in my inclusion class (period 7). However, among English I students, the results were consistently high despite having never covered that standard. It would have been useful to see why the students did so much better on one question than another given the fact that no class had covered the standard.

According to the author, Bishop Robert Barron, this book is intended to help bring Catholics back to the fold in regards to the Catholic teaching that despite all appearances to the contrary, the cracker and wine of Mass become the body and blood of Jesus.

How does he do this? Does he deal with the simple fact that one reason a lot of people don’t believe this literal-bronze-age nonsense is because we’ve learned a bit about the nature of reality in the past two thousand years, and we understand that the classic explanation of substance and accident (a la Aristotle) is really just an ancient attempt at explaining the world which has now evolved into current scientific understanding? No. Does he deal with the Church’s own admission that nothing physically changes? Even more no. So how does he deal with it? The only way he can — the best way Catholics deal with anything in their faith that inherently makes no sense. He piles on the metaphors.

But why then the prohibition [in the Garden of Eden]? Why is the tree of the knowledge of good and evil forbidden to them? The fundamental determination of good and evil remains, necessarily, the prerogative of God alone, since God is, himself, the ultimate good. To seize this knowledge, therefore, is to claim divinity for oneself-and this is the one thing that a creature can never do and thus should never try. To do so is to place oneself in a metaphysical contradiction, interrupting thereby the loop of grace and ruining the sacrum convivium (sacred banquet). Indeed, if we turn ourselves into God, then the link that ought to connect us, through God, to the rest of creation is lost, and we find ourselves alone. This is, in the biblical reading, precisely what happens. Beguiled by the serpent’s suggestion that God is secretly jealous of his human creatures, Eve and Adam ate of the fruit of the tree of the knowledge of good and evil. They seized at godliness that they might not be dominated by God, and they found themselves, as a consequence, expelled from the place of joy. Moreover, as the conversation between God and his sinful creatures makes plain, this “original.”

What is this saying? Does this amount to anything other than just a rehashing of the story with some new metaphors thrown in? I don’t see anything more than that.

This complex symbolic narrative is meant to explain the nature of sin as it plays itself out across the ages and even now. God wants us to eat and drink in communion with him and our fellow creatures, but our own fear and pride break up the party. God wants us gathered around him in gratitude and love, but our resistance results in scattering, isolation, violence, and recrimination. God wants the sacred meal; we want to eat alone and on our terms.

Again, this is just metaphor. It doesn’t mean anything because it refers only to some story in a book that is itself of dubious historical accuracy (read: nonsense). Even Barron would suggest that the story of the Garden of Eden is really more metaphor than anything else, so this is all metaphor about another metaphor.

[T]he salvation wrought through Israel and Jesus and made present in the Mass has to do with the healing of the world. We see this dimension especially in the gifts of bread and wine presented at the offertory. To speak of bread is to speak, implicitly, of soil, seed, grain, and sunshine that crossed ninety million miles of space; to speak of wine is to speak, indirectly, of vine, earth, nutrients, storm clouds, and rainwater. To mention earth and sun is to allude to the solar system of which they are a part, and to invoke the solar system is to assume the galaxy of which it is a portion, and to refer to the galaxy is to hint at the unfathomable realities that condition the structure of the measurable universe. Therefore, when these gifts are brought forward, it is as though the whole of creation is placed on the altar before the Lord. In the older Tridentine liturgy, the priest would make this presentation facing the east, the direction of the rising sun, signaling that the Church’s prayer was on behalf not simply of the people gathered in that place but of the cosmos itself.

We can’t be surprised at the degree to which Barron relies on metaphor to describe the rituals of the Catholic Mass since he can’t even describe his god in straight terms:

God is, in his ownmost reality, not a monolith but a communion of persons. From all eternity, the Father speaks himself, and this Word that he utters is the Son. A perfect image of his Father, the Son shares fully the actuality of the Father: unity, omniscience, omnipresence, spiritual power. This means that, as the Father gazes at the Son, the Son gazes back at the Father. Since each is utterly beautiful, the Father falls in love with the Son and the Son with the Father-and they sigh forth their mutual love. This holy breath (Spiritus Sanctus) is the Holy Spirit. These three “persons” are distinct, yet they do not constitute three Gods.

The Father “speaks himself”? What could that possibly mean? He insists that “as the Father gazes at the Son, the Son gazes back at the Father.” How can spiritual beings gaze at each other? It makes no sense. And then they “sigh forth their mutual love.” What, do the Father and the Son breathe? What are they sighing? They don’t even have bodies — how can this make any sense? That “holy breath” is the third part of this weird god? And yet it’s one god? In an attempt to use metaphor to explain the inherently self-contradictory notion that three is one and one is three, Barron just ends up uttering inane deepities.